An AWS IAM Identity Center vulnerability

The short version: AWS IAM Identity Center exchanges third-party OIDC tokens for Identity Center-issued tokens. Identity Center relies on the jti claim in the third-party tokens to prevent replay attacks. Identity Center maintained a cache of previously-seen jti values for a fixed period (24 hours) and didn't enforce that the third-party tokens had expiry claims. This meant that a token with a jti claim and without an exp claim could be replayed once more than 24 hours had passed. AWS now enforces that these third-party OIDC tokens include an exp claim.

I feel this was a relatively minor issue, because a) few legitimate IdPs issue tokens that never expire and b) the affected CreateTokenWithIAM API also required AWS IAM authorization (unlike the public CreateToken API).
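To make the replay window concrete, here is a minimal Python sketch of a jti cache with a fixed 24-hour retention period. It is a toy model only, not AWS's implementation: `ReplayGuard`, `FakeClock` and `CACHE_TTL` are hypothetical names, and the `require_exp` flag stands in for the fix. Retaining a jti until the later of the cache window and the token's expiry is an assumption of this sketch; the details of how Identity Center handles long-lived exp values are not something this post covers.

```python
import time
from typing import Callable, Dict

CACHE_TTL = 24 * 60 * 60  # fixed 24-hour jti cache window, in seconds


class FakeClock:
    """Controllable clock so the example does not have to wait a day."""

    def __init__(self, start: float = 1_700_000_000.0) -> None:
        self.now = start

    def __call__(self) -> float:
        return self.now


class ReplayGuard:
    """Toy model of jti-based replay prevention (hypothetical, not AWS code)."""

    def __init__(self, require_exp: bool, clock: Callable[[], float] = time.time) -> None:
        self.require_exp = require_exp
        self.clock = clock
        self._forget_at: Dict[str, float] = {}  # jti -> time the entry is dropped

    def accept(self, claims: dict) -> bool:
        now = self.clock()
        # Drop cache entries whose retention period has passed.
        self._forget_at = {j: t for j, t in self._forget_at.items() if t > now}

        exp = claims.get("exp")
        if exp is None and self.require_exp:
            return False  # the fix: tokens without an expiry are rejected
        if exp is not None and exp <= now:
            return False  # expired tokens are rejected regardless of the cache

        jti = claims.get("jti")
        if jti is None or jti in self._forget_at:
            return False  # missing or already-seen jti

        # Assumption of this sketch: remember the jti until the later of the
        # cache window and the token's own expiry.
        retain_until = now + CACHE_TTL if exp is None else max(now + CACHE_TTL, exp)
        self._forget_at[jti] = retain_until
        return True


clock = FakeClock()
guard = ReplayGuard(require_exp=False, clock=clock)
j1 = {"iss": "https://idp.example", "sub": "alice", "jti": "j1"}  # no exp claim

print(guard.accept(j1))     # True: first use is accepted
print(guard.accept(j1))     # False: immediate replay is caught by the cache
clock.now += CACHE_TTL + 1  # a little over 24 hours later
print(guard.accept(j1))     # True: the cache has forgotten j1, so the replay works
```

With `require_exp=True` the same token is rejected on first use, and a token that does carry an exp claim stops being accepted once that time has passed, whether or not its jti is still cached.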

Story

Around AWS re:Invent 2023, Amazon launched a handful of features that make it easier for customers to access their cloud data in a scalable and auditable way. These features span IAM Identity Center (formerly AWS SSO), S3, Athena, Glue, Lake Formation and other services.

The APIs, SDKs and documentation were released over a period of a couple of weeks. I dived in straight away, because I'm interested in any changes to authentication, authorisation or audit logs in AWS. Documentation was a little sparse in the beginning, so I had to learn how to use these APIs through trial and error. Interestingly, had I waited a few days the documentation would have been more complete so I wouldn't have needed to experiment and likely wouldn't have found this issue.

Specifically, IAM Identity Center's trusted identity propagation docs at the time didn't yet specify that the jti claim in the OIDC token was required. I had created a hand-written OIDC IdP to trial the functionality - that felt easier than trying to sign up and learn how to use Okta/Microsoft Entra/other IdPs. Through a process of trial and error[1] I learned that my tokens needed a jti claim and it had to be unique each time. I also learned that they didn't need iat (issued at time), nbf (not-before time) or exp (expiry time) claims.
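For illustration, a token of the shape described above (a unique jti, and no iat, nbf or exp) can be minted with nothing but the Python standard library. This is a sketch under assumptions rather than the code used for the hand-written IdP: the issuer, subject and audience values are placeholders, `mint_token` and `decode_claims` are hypothetical helpers, and HS256 is used only because the standard library can compute it. A real OIDC IdP would presumably sign with an asymmetric key that it publishes for verifiers.

```python
import base64
import hashlib
import hmac
import json
import uuid


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def mint_token(secret: bytes, issuer: str, subject: str, audience: str) -> str:
    """Build a signed JWT with a fresh jti and no timestamp claims."""
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "iss": issuer,
        "sub": subject,
        "aud": audience,
        "jti": str(uuid.uuid4()),  # unique per token
        # deliberately no iat, nbf or exp claims
    }
    signing_input = (
        b64url(json.dumps(header).encode("utf-8"))
        + "."
        + b64url(json.dumps(claims).encode("utf-8"))
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + b64url(signature)


def decode_claims(token: str) -> dict:
    """Decode the payload segment without verifying the signature."""
    payload = token.split(".")[1]
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


token = mint_token(b"demo-secret", "https://idp.example", "alice", "my-audience")
print(sorted(decode_claims(token)))  # ['aud', 'iss', 'jti', 'sub']
```

Because such a token carries no expiry of its own, the only thing standing between it and a replay is whatever the receiving service remembers about its jti.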

I got distracted by a different issue[2] (wherein the AWS docs provided an incorrect ARN) and chased down a suspicion that there was a security issue with that. That theory went nowhere, so it took a few days (and chatting with peers) to realise that the combination of relying on unique jti claims + no expiry claims could only work if IAM Identity Center maintained a cache of every jti it had ever seen, forever. So I created a token, exchanged it and saved it to disk. Two days later I tried it again and it worked again. The docs said there was replay prevention, so this should have failed: bug confirmed.
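The experiment itself is simple enough to sketch. The helper below reads a saved token from disk and presents it to CreateTokenWithIAM using the JWT bearer grant type. `try_exchange` and the file name are hypothetical, and `client` is assumed to be a boto3 `sso-oidc` client (or anything exposing the same `create_token_with_iam` method); the exact error raised on rejection is not something this sketch relies on.

```python
from pathlib import Path

JWT_BEARER_GRANT = "urn:ietf:params:oauth:grant-type:jwt-bearer"


def try_exchange(client, client_id: str, token_path: Path) -> bool:
    """Present the saved third-party token and report whether it was accepted."""
    assertion = token_path.read_text().strip()
    try:
        client.create_token_with_iam(
            clientId=client_id,
            grantType=JWT_BEARER_GRANT,
            assertion=assertion,
        )
    except Exception as err:  # with boto3 this would be a botocore error class
        print(f"rejected: {type(err).__name__}")
        return False
    return True


# Usage against a real account (requires boto3 and AWS IAM credentials):
#   import boto3
#   client = boto3.client("sso-oidc")
#   try_exchange(client, "<your application id>", Path("j1.jwt"))
```

Run once when the token is minted and again a couple of days later; with working replay prevention, the second call should return False.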

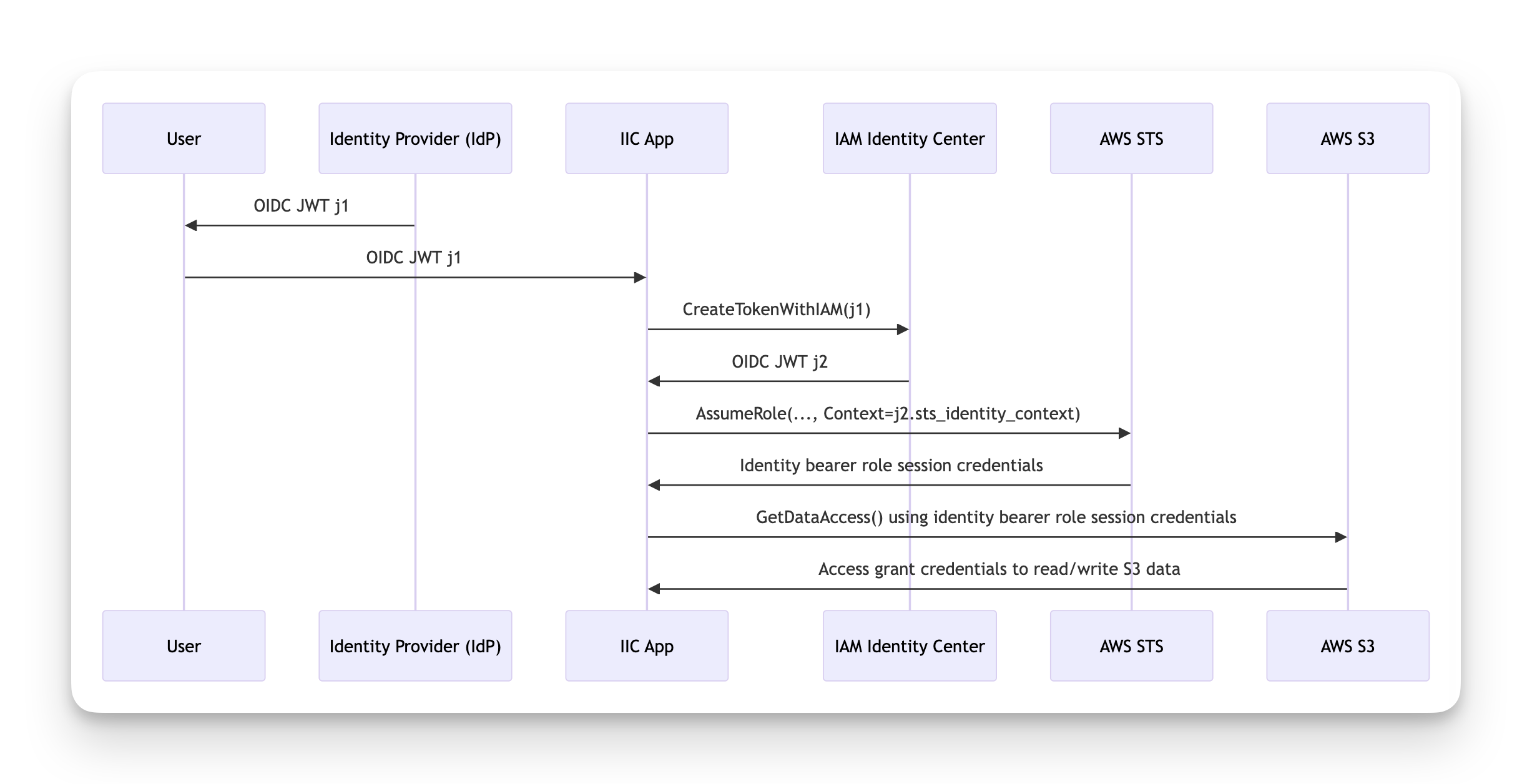

If you like sequence diagrams (who doesn't?), here's one that represents the flow. The problem was that the OIDC JWT token named j1 could be replayed after 24 hours.

Remediation

I emailed the AWS Security team with this finding on December 1st, while re:Invent was still on. They got back to me less than 12 hours later with an initial (human) confirmation of my email. I got a follow-up email on December 9th to confirm that they were still actively investigating the issue. On December 15th, I got an update to say that fixes for this issue had been deployed to all regions. That's a very quick turnaround for a small issue reported during re:Invent.

Thanks

Thanks to Ian Mckay, Nathan Glover and Brandon Sherman for sanity-checking this blog post. It was even harder to read before their helpful advice.

Footnotes

1. My tokens kept getting rejected, so I was randomly trying all kinds of permutations: tokens with aud claims and without sub claims, tokens with both aud and sub claims, tokens with a sub claim and no exp claim, etc. It was slow work. I eventually tried adding jti and nbf claims - and it worked! I then tried without an nbf claim - it failed. It took a while before I realised that it failed because I had re-used the jti, not because of the missing timestamp claims.

2. I also solved this issue through trial and error by trying all plausible ARNs. I eventually stumbled on one that worked and tweeted about it. On November 29th I received an email from the AWS Security Outreach team to say that they'd seen my tweet, fixed all the docs and that the correct ARN was in fact something different. How many giant corporations can ever react that quickly to a documentation issue, let alone during their annual conference? Super impressive.